#JProfiler already running service install#

Monitoring a Java application is usually straightforward when it runs as a Java process on your local machine. But when the application runs inside a Docker container on a Docker host, it becomes challenging to monitor it with tools running locally, even when the Docker host is just a virtual machine running on your desktop. In this blog post, I will describe a couple of ways to monitor such Java applications.

One way is to connect VisualVM to the application through JMX agents. VisualVM is a GUI tool that monitors and profiles a JVM. It comes with the JDK (it was bundled through JDK 8) and can be started by running the `jvisualvm` command. You can also install it as a separate application.
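For a JVM inside a Docker container, connecting through JMX usually means enabling the JMX agent and publishing its port on the Docker host. Here is a minimal sketch as a Docker Compose file. The service name, image name and port 9010 are assumptions for illustration, and authentication and SSL are switched off, which is only reasonable for local experiments.

```yaml
# docker-compose.yml: a sketch under stated assumptions, not a hardened setup.
# Hypothetical: service name "app", image "my-java-app:latest", port 9010.
services:
  app:
    image: my-java-app:latest
    environment:
      # The JVM reads JAVA_TOOL_OPTIONS at startup.
      # java.rmi.server.hostname must be the address VisualVM uses to reach
      # the Docker host (127.0.0.1 here; use the VM's IP if the host is a VM).
      JAVA_TOOL_OPTIONS: >-
        -Dcom.sun.management.jmxremote
        -Dcom.sun.management.jmxremote.port=9010
        -Dcom.sun.management.jmxremote.rmi.port=9010
        -Dcom.sun.management.jmxremote.authenticate=false
        -Dcom.sun.management.jmxremote.ssl=false
        -Djava.rmi.server.hostname=127.0.0.1
    ports:
      - "9010:9010"   # publish the JMX port on the Docker host
```

With the container running, adding a JMX connection in VisualVM to the Docker host's address and port 9010 should list the remote JVM.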

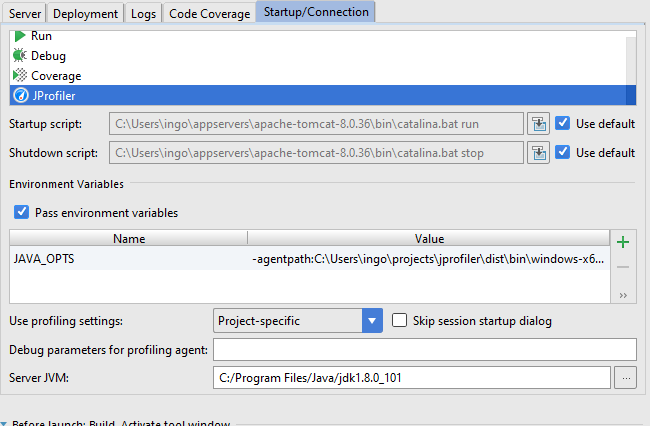

Java developers need to monitor and troubleshoot Java applications from time to time.

Of course, you don't need to install the libraries in each container separately. Kubernetes uses Volumes to share files between containers, so you can create a `local` type of Volume with the JProfiler libs inside. A local volume represents a mounted local storage device such as a disk, partition or directory.

You also need to keep in mind that if you share the Volume between Pods, those Pods will not know about the JProfiler libs being attached. You will need to configure the Pod with the correct environment variables or files through the use of Secrets or ConfigMaps, for example an agent path setting such as `JPAGENT_PATH = "-agentpath:/usr/local/jprofiler10/bin/linux-x64/libjprofilerti.…`. You can configure your Pod to pull values from a Secret; the manifest starts with `apiVersion: v1`.
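A Pod that mounts the JProfiler libs from a volume and pulls its agent setting from a Secret might look roughly like the sketch below. The Pod, image, Secret, key and claim names are all hypothetical. The mount path is taken from the `JPAGENT_PATH` value above. The sketch also assumes a `local` PersistentVolume has already been created and bound to the claim, since local volumes are consumed through a PersistentVolumeClaim.

```yaml
# Sketch only: every name below is a placeholder chosen for illustration.
apiVersion: v1
kind: Pod
metadata:
  name: profiled-app
spec:
  containers:
    - name: app
      image: my-java-app:latest          # hypothetical application image
      env:
        - name: JPAGENT_PATH             # value comes from the Secret, not the image
          valueFrom:
            secretKeyRef:
              name: jprofiler-settings   # hypothetical Secret
              key: agentpath             # hypothetical key inside that Secret
      volumeMounts:
        - name: jprofiler-libs
          mountPath: /usr/local/jprofiler10
          readOnly: true
  volumes:
    - name: jprofiler-libs
      persistentVolumeClaim:
        claimName: jprofiler-libs-pvc    # hypothetical claim bound to a local PersistentVolume
```

The application's start script still has to pass `$JPAGENT_PATH` to the `java` command. Setting the environment variable alone does not attach the agent.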

#JProfiler already running service portable#

Looks like you are missing the general concept here. The official documentation explains nicely why to use containers. The new way is to deploy containers based on operating-system-level virtualization rather than hardware virtualization. These containers are isolated from each other and from the host: they have their own filesystems, they can't see each other's processes, and their computational resource usage can be bounded. They are easier to build than VMs, and because they are decoupled from the underlying infrastructure and from the host filesystem, they are portable across clouds and OS distributions.

The idea is to have an image for JProfiler separate from the application's image. Use the JProfiler image for an Init Container; the Init Container copies the JProfiler installation to a volume shared between that Init Container and the other containers that will be started in the Pod. This way, the JVM can reference the JProfiler agent from the shared volume at startup time. It goes something like this (more details are in this blog article): the Init Container's `command` copies the installation into the shared volume.

Replace `/jprofiler/` in that command with the correct path to the installation directory in the JProfiler image. Notice that the copy command creates the `/tmp/jprofiler` directory, under which the JProfiler installation goes; that directory is used as the mount path.

Add JProfiler as an agent to the JVM startup arguments: `-agentpath:/jprofiler/bin/linux-x64/libjprofilerti.so=port=8849`. Notice that there isn't a "nowait" argument. That causes the JVM to block at startup and wait for a JProfiler GUI to connect. The reason is that with this configuration the profiling agent receives its profiling settings from the JProfiler GUI.

Change the application deployment to start with only one replica. Alternatively, start with zero replicas and scale to one when ready to start profiling.

To connect from the JProfiler GUI to the remote JVM, find out the name of the pod (e.g. `kubectl -n <namespace> get pods`) and set up port forwarding to it. Change the local port 8849 (the number to the left of the `:`) if it isn't available, then point JProfiler to that different port. Start JProfiler locally and point it to 127.0.0.1, port 8849.
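The Init Container approach can be sketched as a Deployment like the one below. This is an illustration under assumptions, not the original author's manifest: both image names and all resource names are hypothetical, the JProfiler image is assumed to keep its installation under `/jprofiler`, and passing the agent flag through `JAVA_TOOL_OPTIONS` is just one possible way to add it to the JVM startup arguments.

```yaml
# Sketch only: image names, labels and resource names are placeholders.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: app-with-jprofiler
spec:
  replicas: 1                      # a single replica while profiling
  selector:
    matchLabels:
      app: app-with-jprofiler
  template:
    metadata:
      labels:
        app: app-with-jprofiler
    spec:
      initContainers:
        - name: jprofiler-init
          image: example/jprofiler:latest     # hypothetical image containing /jprofiler
          # Copies the installation into /tmp/jprofiler, where the shared volume is mounted.
          command: ["/bin/sh", "-c", "cp -R /jprofiler/ /tmp/"]
          volumeMounts:
            - name: jprofiler
              mountPath: /tmp/jprofiler
      containers:
        - name: app
          image: example/my-java-app:latest   # hypothetical application image
          env:
            # Assumption: the agent flag is passed via JAVA_TOOL_OPTIONS.
            # There is no "nowait", so the JVM blocks until the GUI connects.
            - name: JAVA_TOOL_OPTIONS
              value: "-agentpath:/jprofiler/bin/linux-x64/libjprofilerti.so=port=8849"
          ports:
            - containerPort: 8849
          volumeMounts:
            - name: jprofiler
              mountPath: /jprofiler           # same volume, seen by the application container
      volumes:
        - name: jprofiler
          emptyDir: {}                        # scratch volume shared within the Pod
```

Once the Pod is running, a command along the lines of `kubectl -n <namespace> port-forward <pod-name> 8849:8849` forwards the agent port to the local machine. The number to the left of the `:` is the local port.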
